Information

HeaRTDroid

Software

HeaRTDroid

HeaRTDroidHeaRTDroid is a rule-based inference engine both for Android mobile devices, and desktop solutions

Information

HeaRTDroid

Software

There are two ways of interacting with HeaRTDroid:

Both of these methods are equivalent, although Java API allows for more flexibility than commandline shell, obviously. Below thee two approaches are described with the emphasis on three most important factors that impacts the reasoning:

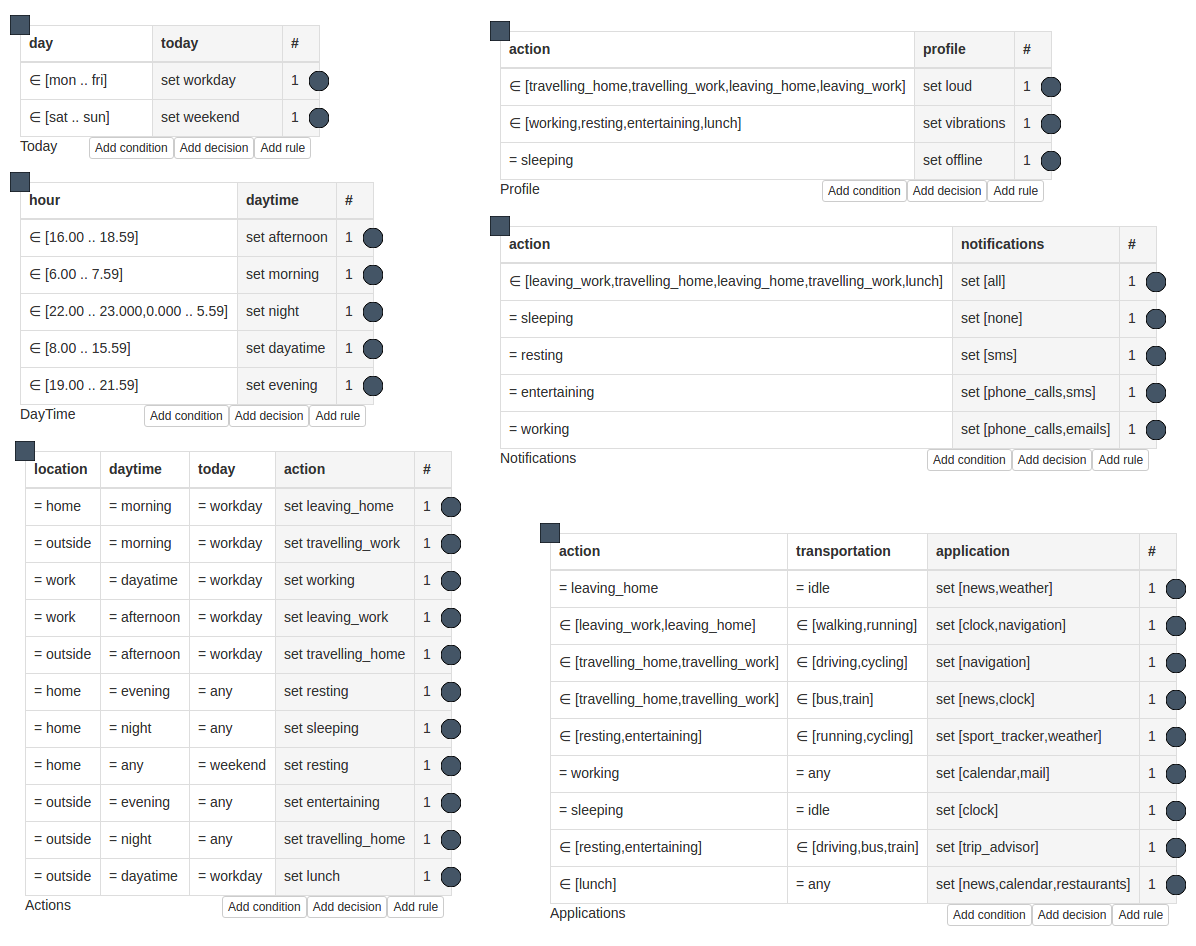

For the purpose of this tutorial we will use the simplified (and not completed) version of a model presented in: Uncertainty handling in rule-based mobile context-aware systems. The model uses informatio about current time, day and user location and transportation mode to derive either the applications that should be recommended to the user, mobile phone profile (silent, loud, vibrations, etc.), or types of notifications that should be presented to the user on the locked screen. These three different decisions are produced by three separate tables: Application, Profile and Notification, respectively. The full model is presented in the Figure below:

The HMR file with callbacks for obtaining hour and day of a week can be downloaded from here.

HeaRTDroid supports three different types of inference: Fixed order, Data driven and Goal driven. We should chose the inference mode depending on the goal we want to achieve. The next section discusses different use case scenarios to demonstrate when each of the reasoning modes is useful.

There are three reasoning modes available in HeaRTDroid:

In the following Figure DDI and GDI inference modes were presented (FOI was skipped, being trivial). In the Figure, every mode can be tokenized, meaning that only tables with sufficient number of tokens can be processed. This feature is not yet released in stable version.

The following paragraphs gives more intuition on when each of these modes is useful.

In FOI mode, the list of tables to process is given explicitly. No additional crawling over the network of tables is done, hence this mode is the fastest one. In case when each reasoning cycle has to process specific set of tables is specific order that do not change – this mode might be a best choice.

It can also be useful for testing the model, since you can separately run the inference on different tables to see how they handle incomplete or uncertain data.

This mode may also be useful if you use looped tables (i.e. tables that uses the same attribute in conditional and in decision part. Such tables are not recommended, but possible) along with the uncertainty management for cumulative rules (i.e. rules that use the same attributes in decision part, but are spread over different tables – see Managing uncertainty with Certainty Factors Algebra tutorial) This is because in the DDI and GDI modes the order of tables that have the same schemas is non-deterministic. However, the order may be important in case of looped tables and uncertainty evaluation.

In DDI mode the engine treats the XTT2 network as a directed graph and uses BFS algorithm to build the list of tables to process.

It takes as a parameter a subset of these tables from which the inference should start.

The BFS algorithm is then launched treating every initial table as a starting node.

Every table that the algorithm visits is placed on a list  in such a way that the paths generated by subsequent BFS launches are concatenated to the beginning of the list.

This assures that the order of the tables will be correct according to forward-chaining paradigm.

in such a way that the paths generated by subsequent BFS launches are concatenated to the beginning of the list.

This assures that the order of the tables will be correct according to forward-chaining paradigm.

The DDI inference strategy interprets the XTT2 model as a direct graph, in which the edges between tables are determined by their schemas.

The edges are directed according to the following pattern.

If schema of a table  is defined as

is defined as  , then the edge from this table to all tables with schema

, then the edge from this table to all tables with schema  , such that

, such that  is created.

Finally, all the elements from list

is created.

Finally, all the elements from list  are placed on the stack.

The stack is then rewritten into resulting stack

are placed on the stack.

The stack is then rewritten into resulting stack  in reverse order.

in reverse order.

This mode is useful, if the model can change over the time, but the initial tables would not. In such a case the explicit list of tables is not possible to be given a priori, bu the DDI mode can build it dynamically. Also, it may be useful when the number of tables to process is relatively large and listing them explicitly might be difficult.

The GDI mode is similar to DDI mode.

The user (or system) gives the algorithm a list of tables (or single table) that produce desired conclusions.

Every table that the algorithm visits is placed on a list  in such a way that the paths generated by subsequent BFS launches are concatenated to the beginning of the list

However, it differs in a way the direction of graph edges is interpreted.

The edges are directed according to the following pattern.

If schema of a table

in such a way that the paths generated by subsequent BFS launches are concatenated to the beginning of the list

However, it differs in a way the direction of graph edges is interpreted.

The edges are directed according to the following pattern.

If schema of a table  is defined as

is defined as  , then the edge from this table to all tables with schema

, then the edge from this table to all tables with schema  , such that

, such that  is created.

Finally, all the elements from list

is created.

Finally, all the elements from list  are placed on resulting the stack

are placed on resulting the stack  .

.

This mode is useful, if the model can change over the time, but the initial tables would not. Additionally, this mode may com in handy when you have a model that produces a lot of different conclusions, but not all of the conclusions are needed in each reasoning cycle.

As an example take the model presented at the beginning of this tutorial. There are three tables that produce different conclusions: Application, Notification and Profile. However, sometimes the system may only need the application recommendation. In such a case processing of Notification and Profile tables will be waste of resources, but also will end up in firing rules which are not related to the actual goal of the invoked inference.

This is when the GDI inference should be used. By specifying which table is the goal table, the system will automatically collect all other tables that are needed to perform reasoning, i.e. Today, DayTime and Action.

There are three types of evaluation of rules in HeaRTDroid:

Both interaction methods (HaQuNa and Java API) support two first modes. The third mode is available as a separate module and can be used either as a standalone application, or as a library via Java API.

Conflict set is understood as a set of rules that have their conditions fulfilled. Different behaviors of the inference engine could be desired in such situations. In HeaRTDroid there are three basic types of conflict set resolution mechanisms, that can work differently depending which uncertainty handling mechanism was chosen. These conflict set resolution strategies are:

The behavior of these strategies changes (or is modified) if uncertainty handling with CF algebra is turned on. In CF evaluation, the rules from the single table are divided into subsets, such that rules that produce the same conclusion (i.e. sets exactly the same value to the attribute from conditional part) are in the same subset. The conflict resolution is applied to these subsets, not to the XTT2 table as a whole. In such a case it may happen (it probably will) that several rules from a single table will be fired even when selecting First or Last strategies.

Please refer to HaQuNa tutorial.

Please refer to HeaRTDroid quickstart section.